Lyapunov stability

Various types of stability may be discussed for the solutions of differential equations describing dynamical systems. The most important type is that concerning the stability of solutions near to a point of equilibrium. This may be discussed by the theory of Lyapunov. In simple terms, if all solutions of the dynamical system that start out near an equilibrium point $x_e$ stay near $x_e$ forever, then $x_e$ is Lyapunov stable. More strongly, if $x_e$ is Lyapunov stable and all solutions that start out near $x_e$ converge to $x_e$, then $x_e$ is asymptotically stable. The notion of exponential stability guarantees a minimal rate of decay, i.e., an estimate of how quickly the solutions converge. The idea of Lyapunov stability can be extended to infinite-dimensional manifolds, where it is known as structural stability, which concerns the behavior of different but "nearby" solutions to differential equations. Input-to-state stability (ISS) applies Lyapunov notions to systems with inputs.


History

Lyapunov stability is named after Aleksandr Lyapunov, a Russian mathematician who published his book "The General Problem of the Stability of Motion" in 1892. Lyapunov was the first to consider the modifications necessary in nonlinear systems to the linear theory of stability based on linearizing near a point of equilibrium. His work, initially published in Russian and then translated to French, received little attention for many years. Interest in it started suddenly during the Cold War (1953-1962) period, when the so-called "Second Method of Lyapunov" was found to be applicable to the stability of aerospace guidance systems, which typically contain strong nonlinearities not treatable by other methods. A large number of publications appeared then and since in the control and systems literature. More recently the concept of the Lyapunov exponent (related to Lyapunov's First Method of discussing stability) has received wide interest in connection with chaos theory. Lyapunov stability methods have also been applied to finding equilibrium solutions in traffic assignment problems, following work by M. J. Smith and M. B. Wisten.

Definition for continuous-time systems

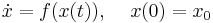

Consider an autonomous nonlinear dynamical system

,

,

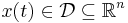

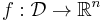

where  denotes the system state vector,

denotes the system state vector,  an open set containing the origin, and

an open set containing the origin, and  continuous on

continuous on  . Suppose

. Suppose  has an equilibrium

has an equilibrium  .

.

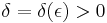

- The equilibrium of the above system is said to be Lyapunov stable if, for every $\epsilon > 0$, there exists a $\delta > 0$ such that, if $\|x(0) - x_e\| < \delta$, then $\|x(t) - x_e\| < \epsilon$ for every $t \ge 0$.

- The equilibrium of the above system is said to be asymptotically stable if it is Lyapunov stable and if there exists $\delta > 0$ such that if $\|x(0) - x_e\| < \delta$, then $\lim_{t \to \infty} \|x(t) - x_e\| = 0$.

- The equilibrium of the above system is said to be exponentially stable if it is asymptotically stable and if there exist $\alpha > 0$, $\beta > 0$, $\delta > 0$ such that if $\|x(0) - x_e\| < \delta$, then $\|x(t) - x_e\| \le \alpha \|x(0) - x_e\| e^{-\beta t}$ for $t \ge 0$.
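The following minimal worked illustration shows how the three definitions fit together. The scalar system is an assumed example and does not come from the text. Take $\dot{x} = -a x$ with $a > 0$ and equilibrium $x_e = 0$:

$$
\begin{aligned}
x(t) &= x_0 e^{-a t}, \qquad t \ge 0,\\
|x(t) - x_e| &= |x_0|\, e^{-a t} \le |x_0| < \delta \quad\Rightarrow\quad \delta = \epsilon \text{ works (Lyapunov stable)},\\
\lim_{t \to \infty} |x(t) - x_e| &= 0 \quad \text{for every } x_0 \text{ (asymptotically stable)},\\
|x(t) - x_e| &\le \alpha\, |x_0 - x_e|\, e^{-\beta t} \quad \text{with } \alpha = 1,\ \beta = a \text{ (exponentially stable)}.
\end{aligned}
$$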

Conceptually, the meanings of the above terms are the following:

- Lyapunov stability of an equilibrium means that solutions starting "close enough" to the equilibrium (within a distance $\delta$ from it) remain "close enough" forever (within a distance $\epsilon$ from it). Note that this must be true for any $\epsilon$ that one may want to choose.

- Asymptotic stability means that solutions that start close enough not only remain close enough but also eventually converge to the equilibrium.

- Exponential stability means that solutions not only converge, but in fact converge faster than or at least as fast as a particular known rate $\alpha \|x(0) - x_e\| e^{-\beta t}$.
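The exponential estimate can be checked numerically on a hypothetical nonlinear example. For $\dot{x} = -x - x^3$ we have $\frac{d}{dt}x^2 = -2x^2 - 2x^4 \le -2x^2$, so $|x(t)| \le |x_0| e^{-t}$. The bound therefore holds with $\alpha = 1$, $\beta = 1$.

The sketch below integrates the equation with a classical fourth-order Runge-Kutta step and measures how far the numerical solution ever exceeds that bound. The integrator and step size are assumed choices. A finite simulation illustrates the property but does not prove it.

```python
import math


def rk4_step(f, t, x, dt):
    """One classical fourth-order Runge-Kutta step for the scalar ODE x' = f(t, x)."""
    k1 = f(t, x)
    k2 = f(t + dt / 2, x + dt * k1 / 2)
    k3 = f(t + dt / 2, x + dt * k2 / 2)
    k4 = f(t + dt, x + dt * k3)
    return x + dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6


def rhs(t, x):
    # Hypothetical example system x' = -x - x^3 with equilibrium x_e = 0.
    return -x - x ** 3


def max_bound_violation(x0, alpha=1.0, beta=1.0, t_end=10.0, dt=1e-3):
    """Largest excess of |x(t)| over alpha*|x0|*exp(-beta*t) on the grid (0.0 if never exceeded)."""
    x, t, worst = x0, 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        x = rk4_step(rhs, t, x, dt)
        t += dt
        worst = max(worst, abs(x) - alpha * abs(x0) * math.exp(-beta * t))
    return worst
```

With the default rate, `max_bound_violation(3.0)` returns `0.0`. Requesting the faster rate `beta=2.0` gives a positive excess, because near the origin solutions decay only like $e^{-t}$.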

The trajectory $x$ is (locally) attractive if

$$\|y(t) - x(t)\| \to 0 \quad \text{as } t \to \infty$$

for all trajectories $y$ that start close enough to $x$. It is globally attractive if this property holds for all trajectories $y$.

That is, $x$ is attractive if it belongs to the interior of its stable manifold. It is asymptotically stable if it is both attractive and stable. (There are counterexamples showing that attractivity does not imply asymptotic stability. Such examples are easy to create using homoclinic connections.)

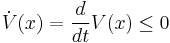

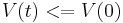

Lyapunov's second method for stability

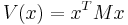

Lyapunov, in his original 1892 work proposed two methods for demonstrating stability. The first method developed the solution in a series which was then proved convergent within limits. The second method, which is almost universally used nowadays, makes use of a Lyapunov function V(x) which has an analogy to the potential function of classical dynamics. It is introduced as follows for a system having a point of equilibrium at x=0. Consider a function  such that

such that

with equality if and only if

with equality if and only if  (positive definite)

(positive definite) with equality if and only if

with equality if and only if  (negative definite).

(negative definite).

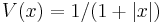

Then V(x) is called a Lyapunov function candidate and the system is asymptotically stable in the sense of Lyapunov (i.s.L.). (Note that  is required; otherwise for example

is required; otherwise for example  would "prove" that

would "prove" that  is locally stable. An additional condition called "properness" or "radial unboundedness" is required in order to conclude global asymptotic stability.)

is locally stable. An additional condition called "properness" or "radial unboundedness" is required in order to conclude global asymptotic stability.)
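The two conditions can be spot-checked numerically for a concrete candidate. The sketch uses a hypothetical system $\dot{x}_1 = -x_1 + x_2$, $\dot{x}_2 = -x_1 - x_2^3$ together with $V(x) = x_1^2 + x_2^2$.

By hand, $\dot{V} = \nabla V \cdot f = 2x_1(-x_1 + x_2) + 2x_2(-x_1 - x_2^3) = -2x_1^2 - 2x_2^4$, which is negative for $x \ne 0$. Sampling a grid can only expose a counterexample. It does not replace that analytic argument.

```python
def f(x1, x2):
    # Hypothetical example vector field with an equilibrium at the origin.
    return (-x1 + x2, -x1 - x2 ** 3)


def v(x1, x2):
    # Candidate Lyapunov function V(x) = x1^2 + x2^2.
    return x1 ** 2 + x2 ** 2


def grad_v(x1, x2):
    return (2 * x1, 2 * x2)


def v_dot(x1, x2):
    """Derivative of V along trajectories: grad V(x) . f(x)."""
    g1, g2 = grad_v(x1, x2)
    f1, f2 = f(x1, x2)
    return g1 * f1 + g2 * f2


def check_conditions(radius=2.0, n=41):
    """True if V > 0 and V_dot < 0 at every nonzero point of an n-by-n grid on the square."""
    step = 2 * radius / (n - 1)
    for i in range(n):
        for j in range(n):
            x1, x2 = -radius + i * step, -radius + j * step
            if x1 == 0 and x2 == 0:
                continue  # the equilibrium itself is excluded
            if not (v(x1, x2) > 0 and v_dot(x1, x2) < 0):
                return False
    return True
```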

It is easier to visualize this method of analysis by thinking of a physical system (e.g., a vibrating spring and mass) and considering the energy of such a system. If the system loses energy over time and the energy is never restored, then eventually the system must grind to a stop and reach some final resting state. This final state is called the attractor. However, finding a function that gives the precise energy of a physical system can be difficult, and for abstract mathematical systems, economic systems or biological systems, the concept of energy may not be applicable.

Lyapunov's realization was that stability can be proven without requiring knowledge of the true physical energy, provided a Lyapunov function can be found to satisfy the above constraints.
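The energy picture can be made concrete with an assumed damped spring-mass example, $m\ddot{q} + c\dot{q} + kq = 0$, with energy $E = \tfrac{1}{2}kq^2 + \tfrac{1}{2}m\dot{q}^2$. Along solutions, $\dot{E} = \dot{q}(m\ddot{q} + kq) = -c\dot{q}^2 \le 0$, so the energy never increases.

However, $\dot{E}$ vanishes whenever $\dot{q} = 0$. It is therefore only negative semi-definite, and the strict conditions of the second method do not apply directly to this $E$. The sketch integrates the system with RK4, using hypothetical parameter values, and records the energy at every step.

```python
def simulate_energy(m=1.0, c=0.4, k=4.0, q0=1.0, v0=0.0, dt=1e-3, t_end=20.0):
    """Integrate m q'' + c q' + k q = 0 with RK4 and return the energy after every step."""

    def rhs(state):
        q, v = state
        return (v, -(c * v + k * q) / m)

    def energy(state):
        q, v = state
        return 0.5 * k * q * q + 0.5 * m * v * v

    state = (q0, v0)
    energies = [energy(state)]
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(state)
        k2 = rhs(tuple(s + dt / 2 * d for s, d in zip(state, k1)))
        k3 = rhs(tuple(s + dt / 2 * d for s, d in zip(state, k2)))
        k4 = rhs(tuple(s + dt * d for s, d in zip(state, k3)))
        state = tuple(
            s + dt / 6 * (a + 2 * b + 2 * e + d)
            for s, a, b, e, d in zip(state, k1, k2, k3, k4)
        )
        energies.append(energy(state))
    return energies
```

The recorded energies form a non-increasing sequence that decays toward zero. This matches the intuition of a system that "grinds to a stop".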

Definition for discrete-time systems

The definition for discrete-time systems is almost identical to that for continuous-time systems. The definition below provides this, using an alternate language commonly used in more mathematical texts.

Let $(X, d)$ be a metric space and $f: X \to X$ a continuous function. A point $x \in X$ is said to be Lyapunov stable if, for each $\epsilon > 0$, there is a $\delta > 0$ such that for all $y \in X$, if

$$d(x, y) < \delta$$

then

$$d\left(f^n(x), f^n(y)\right) < \epsilon$$

for all $n \in \mathbb{N}$.

We say that $x$ is asymptotically stable if it belongs to the interior of its stable set, i.e. if there is a $\delta > 0$ such that

$$\lim_{n \to \infty} d\left(f^n(x), f^n(y)\right) = 0$$

whenever $d(x, y) < \delta$.
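As a hypothetical one-dimensional example, take $X = \mathbb{R}$ with $d(x, y) = |x - y|$, the map $f(x) = \tfrac{1}{2}\sin x$ and its fixed point $x = 0$. Since $|\sin y| \le |y|$, we get $|f^n(y)| \le 2^{-n}|y|$. Hence $\delta = \epsilon$ works in the first definition, and the iterates also converge to $0$. The sketch iterates the map and checks both properties over a finite horizon.

```python
import math


def f(x):
    # Hypothetical continuous map on the metric space (R, |x - y|) with fixed point 0.
    return 0.5 * math.sin(x)


def orbit_distances(y, x=0.0, n_steps=60):
    """Return d(f^n(x), f^n(y)) for n = 0, 1, ..., n_steps."""
    dists = []
    for _ in range(n_steps + 1):
        dists.append(abs(x - y))
        x, y = f(x), f(y)
    return dists


def stays_within(eps, delta, samples=101, n_steps=60):
    """Check d(f^n(0), f^n(y)) < eps for all n, for sampled y strictly inside (-delta, delta)."""
    for i in range(samples):
        y = -delta + 2 * delta * (i + 0.5) / samples
        if max(orbit_distances(y, n_steps=n_steps)) >= eps:
            return False
    return True
```

With $\delta = \epsilon$ the check passes. With $\delta = 2\epsilon$ it fails already at $n = 0$, which is why $\delta$ must depend on $\epsilon$.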

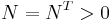

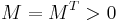

Stability for linear state space models

A linear state space model

$$\dot{\mathbf{x}} = A\mathbf{x}$$

is asymptotically stable (in fact, exponentially stable) if all real parts of the eigenvalues of $A$ are negative. This condition is equivalent to the following one:

$$A^\mathsf{T} M + M A + N = 0$$

has a solution where $N = N^\mathsf{T} > 0$ and $M = M^\mathsf{T} > 0$ (positive definite matrices). (The relevant Lyapunov function is $V(x) = x^\mathsf{T} M x$.)
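The sketch below makes the matrix condition concrete. It solves $A^\mathsf{T} M + M A + N = 0$ for $M$ by turning it into an ordinary linear system in the entries of $M$. This uses the identity $\operatorname{vec}(A^\mathsf{T} M + M A) = (I \otimes A^\mathsf{T} + A^\mathsf{T} \otimes I)\operatorname{vec}(M)$, where $\operatorname{vec}$ stacks columns. It then tests $M$ for positive definiteness with a Cholesky attempt.

The code is plain Python so that it is self-contained, and the example matrices are hypothetical. In practice one would more likely call a dedicated solver such as SciPy's `scipy.linalg.solve_continuous_lyapunov`.

```python
def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


def transpose(a):
    return [list(row) for row in zip(*a)]


def kron(a, b):
    """Kronecker product of square matrices a (n x n) and b (m x m)."""
    n, m = len(a), len(b)
    return [[a[i // m][j // m] * b[i % m][j % m] for j in range(n * m)]
            for i in range(n * m)]


def solve(mat, rhs):
    """Solve mat @ x = rhs by Gaussian elimination with partial pivoting."""
    size = len(mat)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(mat)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("numerically singular system (A and -A may share an eigenvalue)")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, size):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, size + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * size
    for r in range(size - 1, -1, -1):
        acc = sum(aug[r][c] * x[c] for c in range(r + 1, size))
        x[r] = (aug[r][size] - acc) / aug[r][r]
    return x


def lyapunov_solve(a, n_mat):
    """Solve A^T M + M A + N = 0 for M via column-stacked vec and Kronecker products."""
    n = len(a)
    at, eye = transpose(a), identity(n)
    k1, k2 = kron(eye, at), kron(at, eye)
    big = [[k1[i][j] + k2[i][j] for j in range(n * n)] for i in range(n * n)]
    rhs = [-n_mat[i][j] for j in range(n) for i in range(n)]  # -vec(N), column by column
    vec_m = solve(big, rhs)
    return [[vec_m[j * n + i] for j in range(n)] for i in range(n)]  # undo vec


def is_positive_definite(m):
    """Attempt a Cholesky factorization; it succeeds iff the symmetric matrix m is positive definite."""
    n = len(m)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            acc = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                diag = m[i][i] - acc
                if diag <= 0:
                    return False
                low[i][j] = diag ** 0.5
            else:
                low[i][j] = (m[i][j] - acc) / low[j][j]
    return True


# Hypothetical example: A has eigenvalues -1 and -2, and N is the identity.
A = [[0.0, 1.0], [-2.0, -3.0]]
M = lyapunov_solve(A, identity(2))
```

For this $A$ the exact solution is $M = \begin{pmatrix} 5/4 & 1/4 \\ 1/4 & 1/4 \end{pmatrix}$, which is positive definite. For the unstable $A = \operatorname{diag}(1, -2)$, the same routine returns $M = \operatorname{diag}(-1/2, 1/4)$, which is not positive definite.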

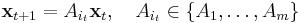

Correspondingly, a time-discrete linear state space model

$$\mathbf{x}_{t+1} = A\mathbf{x}_t$$

is asymptotically stable (in fact, exponentially stable) if all the eigenvalues of $A$ have a modulus smaller than one.
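For a $2 \times 2$ matrix, the eigenvalues follow from the characteristic polynomial $\lambda^2 - (\operatorname{tr} A)\lambda + \det A = 0$, so the discrete-time test is easy to sketch. The code below is restricted to $2 \times 2$ matrices and uses hypothetical example matrices. It computes the spectral radius and iterates $\mathbf{x}_{t+1} = A\mathbf{x}_t$ to show how the state evolves.

```python
import cmath


def eigenvalues_2x2(a):
    """Roots of lambda^2 - tr(A) lambda + det(A) = 0 for a 2x2 matrix A (possibly complex)."""
    tr = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return ((tr + disc) / 2, (tr - disc) / 2)


def spectral_radius_2x2(a):
    """Largest eigenvalue modulus; the discrete-time system is stable when this is below one."""
    return max(abs(lam) for lam in eigenvalues_2x2(a))


def iterate(a, x, steps):
    """Apply x_{t+1} = A x_t the given number of times and return the final state."""
    for _ in range(steps):
        x = (a[0][0] * x[0] + a[0][1] * x[1], a[1][0] * x[0] + a[1][1] * x[1])
    return x
```

For example, $\begin{pmatrix} 0.5 & 1 \\ 0 & 0.8 \end{pmatrix}$ has spectral radius $0.8$, and its iterates shrink toward zero. A rotation-like matrix with eigenvalues $\pm 0.9i$ is also stable. The diagonal matrix $\operatorname{diag}(1.1, 0.5)$ has spectral radius $1.1$, and its iterates grow.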

This latter condition has been generalized to switched systems: a linear switched discrete-time system (ruled by a set of matrices $\{A_1, \dots, A_m\}$)

$$\mathbf{x}_{t+1} = A_{i_t}\mathbf{x}_t, \qquad A_{i_t} \in \{A_1, \dots, A_m\},$$

is asymptotically stable (in fact, exponentially stable) if the joint spectral radius of the set $\{A_1, \dots, A_m\}$ is smaller than one.
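The joint spectral radius $\hat\rho$ is hard to compute exactly in general, but elementary bounds are easy to sketch. For every product length $k$,

$$\max_P \rho(P)^{1/k} \le \hat\rho \le \max_P \|P\|^{1/k},$$

where $P$ ranges over all products of $k$ matrices from the set and $\|\cdot\|$ is any submultiplicative norm. The brute-force code below reports the best of these bounds up to a chosen length. It is restricted to $2 \times 2$ matrices, its cost is exponential in $k$, and it uses the maximum-row-sum norm as an assumed choice. If the upper bound is below one, the switched system is stable under every switching sequence.

The hypothetical example set consists of two nilpotent matrices. Each has spectral radius $0$, yet the product $A_1A_2$ has spectral radius $4$. The joint spectral radius is therefore $2$, even though each matrix passes the single-matrix test.

```python
import cmath
import itertools


def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)] for i in range(2)]


def spectral_radius_2x2(a):
    tr = a[0][0] + a[1][1]
    det = a[0][0] * a[1][1] - a[0][1] * a[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return max(abs((tr + disc) / 2), abs((tr - disc) / 2))


def row_sum_norm(a):
    """Maximum absolute row sum (the induced infinity-norm), which is submultiplicative."""
    return max(sum(abs(x) for x in row) for row in a)


def jsr_bounds(matrices, max_len=6):
    """(lower, upper) bounds on the joint spectral radius from all products of length <= max_len."""
    lower, upper = 0.0, float("inf")
    for k in range(1, max_len + 1):
        best_rho, best_norm = 0.0, 0.0
        for word in itertools.product(matrices, repeat=k):
            p = word[0]
            for m in word[1:]:
                p = matmul(p, m)
            best_rho = max(best_rho, spectral_radius_2x2(p))
            best_norm = max(best_norm, row_sum_norm(p))
        lower = max(lower, best_rho ** (1.0 / k))
        upper = min(upper, best_norm ** (1.0 / k))
    return lower, upper


A1 = [[0.0, 2.0], [0.0, 0.0]]
A2 = [[0.0, 0.0], [2.0, 0.0]]
bounds = jsr_bounds([A1, A2])
```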

Stability for systems with inputs

A system with inputs (or controls) has the form

$$\dot{\mathbf{x}} = \mathbf{f}(\mathbf{x}, \mathbf{u}),$$

where the (generally time-dependent) input $\mathbf{u}(t)$ may be viewed as a control, external input, stimulus, disturbance, or forcing function. The study of such systems is the subject of control theory and is applied in control engineering. For systems with inputs, one must quantify the effect of inputs on the stability of the system. The two main approaches to this analysis are BIBO stability (for linear systems) and input-to-state stability (ISS) (for nonlinear systems).
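As a hypothetical illustration of quantifying the effect of an input, consider the scalar system $\dot{x} = -x + u(t)$. Its solution is

$$x(t) = e^{-t}x_0 + \int_0^t e^{-(t-s)}u(s)\,ds,$$

which obeys $|x(t)| \le e^{-t}|x_0| + \sup_s |u(s)|$. The initial condition is forgotten exponentially, and a bounded input produces a bounded state. This is the kind of estimate that ISS formalizes. The sketch checks the bound along an RK4 trajectory for the assumed input $u(t) = \sin t$.

```python
import math


def simulate(x0, u, t_end=20.0, dt=1e-3):
    """RK4 integration of x' = -x + u(t); returns a list of (t, x) samples."""

    def rhs(t, x):
        return -x + u(t)

    t, x = 0.0, x0
    samples = [(t, x)]
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(t, x)
        k2 = rhs(t + dt / 2, x + dt * k1 / 2)
        k3 = rhs(t + dt / 2, x + dt * k2 / 2)
        k4 = rhs(t + dt, x + dt * k3)
        x += dt * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        t += dt
        samples.append((t, x))
    return samples


def iss_bound_holds(x0, u, u_sup, tol=1e-9):
    """Check |x(t)| <= exp(-t)|x0| + u_sup at every simulated sample."""
    return all(abs(x) <= math.exp(-t) * abs(x0) + u_sup + tol for t, x in simulate(x0, u))
```

With `u_sup=1.0` the bound holds. If the input term is dropped (`u_sup=0.0`), the bound fails, because the input keeps the state away from zero.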

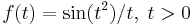

Example

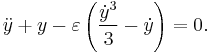

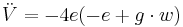

Consider an equation, where compared to the Van der Pol oscillator equation the friction term is changed:

The equilibrium is at :

Here is a good example of an unsuccessful try to find a Lyapunov function that proves stability:

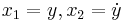

Let

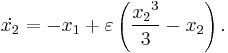

so that the corresponding system is

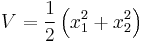

Let us choose as a Lyapunov function

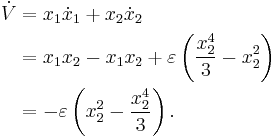

which is clearly positive definite. Its derivative is

It seems that if the parameter  is positive, stability is asymptotic for

is positive, stability is asymptotic for  But this is wrong, since

But this is wrong, since  does not depend on

does not depend on  , and will be 0 everywhere on the

, and will be 0 everywhere on the  axis.

axis.
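The failure is easy to see numerically. For the system $\dot{x}_1 = x_2$, $\dot{x}_2 = -x_1 + \varepsilon(x_2^3/3 - x_2)$ with $V = (x_1^2 + x_2^2)/2$, the sketch evaluates $\dot{V} = \nabla V \cdot f$ directly from the vector field, using an assumed value $\varepsilon = 0.5$. It compares the result with the closed form $\varepsilon x_2^4/3 - \varepsilon x_2^2$.

The results show that $\dot{V}$ is negative for $0 < x_2^2 < 3$, positive for $x_2^2 > 3$, and exactly zero at points on the $x_1$ axis away from the origin.

```python
EPS = 0.5  # assumed value of the parameter epsilon


def f(x1, x2, eps=EPS):
    # State-space form of y'' + y - eps*(y'^3/3 - y') = 0 with x1 = y, x2 = y'.
    return (x2, -x1 + eps * (x2 ** 3 / 3 - x2))


def v_dot(x1, x2, eps=EPS):
    """Derivative of V = (x1^2 + x2^2)/2 along trajectories: x1*f1 + x2*f2."""
    f1, f2 = f(x1, x2, eps)
    return x1 * f1 + x2 * f2


def v_dot_closed_form(x2, eps=EPS):
    return eps * x2 ** 4 / 3 - eps * x2 ** 2
```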

Barbalat's lemma and stability of time-varying systems

Assume that $f$ is a function of time only.

- Having $\dot{f}(t) \to 0$ does not imply that $f(t)$ has a limit as $t \to \infty$. For example, $f(t) = \sin(\ln t)$, $t > 0$.

- Having $f(t)$ approach a limit as $t \to \infty$ does not imply that $\dot{f}(t) \to 0$. For example, $f(t) = \sin(t^2)/t$, $t > 0$.

- Having $f(t)$ lower bounded and decreasing ($\dot{f} \le 0$) implies that it converges to a limit. But this does not say whether or not $\dot{f} \to 0$ as $t \to \infty$.
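Both counterexamples can be checked numerically.

- For $f(t) = \sin(\ln t)$, the derivative $\cos(\ln t)/t$ becomes tiny. Yet $f$ keeps returning to $+1$ at $t = e^{\pi/2 + 2\pi k}$ and to $-1$ at $t = e^{3\pi/2 + 2\pi k}$.
- For $f(t) = \sin(t^2)/t$, the values shrink. However, $\dot{f}(t) = 2\cos(t^2) - \sin(t^2)/t^2$ equals $2$ whenever $t = \sqrt{2\pi k}$.

The particular sample points ($k = 6$ and $k = 1000$) are assumed choices for illustration.

```python
import math


def f1(t):
    return math.sin(math.log(t))


def df1(t):
    return math.cos(math.log(t)) / t


def f2(t):
    return math.sin(t * t) / t


def df2(t):
    # d/dt [sin(t^2)/t] = 2 cos(t^2) - sin(t^2)/t^2
    return 2 * math.cos(t * t) - math.sin(t * t) / (t * t)


k = 6
t_hi = math.exp(math.pi / 2 + 2 * math.pi * k)      # sin(ln t) = +1 here
t_lo = math.exp(3 * math.pi / 2 + 2 * math.pi * k)  # sin(ln t) = -1 here
t_k = math.sqrt(2 * math.pi * 1000)                 # t^2 = 2*pi*1000, so f2' = 2 here

print(f1(t_hi), f1(t_lo), df1(t_hi), df1(t_lo))
print(f2(t_k), df2(t_k))
```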

Barbalat's Lemma says:

- If $f(t)$ has a finite limit as $t \to \infty$ and if $\dot{f}$ is uniformly continuous (or $\ddot{f}$ is bounded), then $\dot{f}(t) \to 0$ as $t \to \infty$.

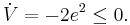

Usually, it is difficult to analyze the asymptotic stability of time-varying systems because it is very difficult to find Lyapunov functions with a negative definite derivative.

For autonomous (time-invariant) systems, even if $\dot{V}$ is only negative semi-definite (NSD), it is still possible to determine the asymptotic behaviour by invoking invariant-set theorems. However, this flexibility is not available for time-varying systems. This is where Barbalat's lemma comes into the picture. It says:

- If $V(x, t)$ satisfies the following conditions:
  1. $V(x, t)$ is lower bounded,
  2. $\dot{V}(x, t)$ is negative semi-definite (NSD),
  3. $\dot{V}(x, t)$ is uniformly continuous in time (satisfied if $\ddot{V}$ is bounded),
- then $\dot{V}(x, t) \to 0$ as $t \to \infty$.

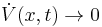

The following example is taken from page 125 of Slotine and Li's book Applied Nonlinear Control.

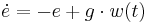

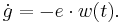

Consider a non-autonomous system

$$\dot{e} = -e + g \cdot w(t), \qquad \dot{g} = -e \cdot w(t).$$

This is non-autonomous because the input $w$ is a function of time. Assume that the input $w(t)$ is bounded.

Taking $V = e^2 + g^2$ gives

$$\dot{V} = 2e\dot{e} + 2g\dot{g} = -2e^2 \le 0.$$

By the first two conditions, this says that $V(t) \le V(0)$, and hence $e$ and $g$ are bounded. But it does not say anything about the convergence of $e$ to zero. Moreover, the invariant set theorem cannot be applied, because the dynamics is non-autonomous.

Using Barbalat's lemma:

$$\ddot{V} = -4e\dot{e} = -4e(-e + g w).$$

This is bounded because $e$, $g$ and $w$ are bounded. This implies $\dot{V} \to 0$ as $t \to \infty$ and hence $e \to 0$. This proves that the error converges.
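A short simulation agrees with the argument. The sketch integrates $\dot{e} = -e + g\,w(t)$, $\dot{g} = -e\,w(t)$ with RK4, using the assumed bounded input $w(t) = \sin t$ and initial values $e(0) = g(0) = 1$; both are hypothetical choices. It then checks that $V = e^2 + g^2$ never increases and that the final error is small. A finite simulation is an illustration, not a substitute for the lemma.

```python
import math


def simulate(e0=1.0, g0=1.0, w=math.sin, t_end=60.0, dt=1e-3):
    """RK4 for e' = -e + g*w(t), g' = -e*w(t); returns lists of t, e and g samples."""

    def rhs(t, e, g):
        return (-e + g * w(t), -e * w(t))

    t, e, g = 0.0, e0, g0
    ts, es, gs = [t], [e], [g]
    for _ in range(int(round(t_end / dt))):
        k1 = rhs(t, e, g)
        k2 = rhs(t + dt / 2, e + dt / 2 * k1[0], g + dt / 2 * k1[1])
        k3 = rhs(t + dt / 2, e + dt / 2 * k2[0], g + dt / 2 * k2[1])
        k4 = rhs(t + dt, e + dt * k3[0], g + dt * k3[1])
        e += dt / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        g += dt / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        t += dt
        ts.append(t)
        es.append(e)
        gs.append(g)
    return ts, es, gs
```

The computed $V$ is non-increasing, as $\dot{V} = -2e^2$ predicts. The error $e$ approaches zero even though $\dot{V}$ alone does not force this.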

References

- Lyapunov A.M. The General Problem of the Stability of Motion (In Russian), Doctoral dissertation, Univ. Kharkov 1892 English translations: (1) Stability of Motion, Academic Press, New-York & London, 1966 (2) The General Problem of the Stability of Motion, (A.T. Fuller trans.) Taylor & Francis, London 1992. Included is a biography by Smirnov and an extensive bibliography of Lyapunov's work.

- Letov A.M. Stability of Nonlinear Control Systems (Russian) Moscow 1955 (Gostekhizdat); English tr. Princeton 1961

- Kalman R.E. & Bertram J.F: Control System Analysis and Design via the Second Method of Lyapunov, J. Basic Engrg vol.88 1960 pp.371; 394

- LaSalle J.P. & Lefschetz S: Stability by Lyapunov's Second Method with Applications, New York 1961 (Academic)

- Parks P.C: Liapunov's method in automatic control theory, Control I Nov 1962 II Dec 1962

- Kalman R.E. Lyapunov functions for the problem of Lurie in automatic control, Proc Nat Acad.Sci USA, Feb 1963, 49, no.2,201-.

- Jean-Jacques E. Slotine and Weiping Li, Applied Nonlinear Control, Prentice Hall, NJ, 1991

- Parks P.C: A.M. Lyapunov's stability theory - 100 years on, IMA Journal of Mathematical Control & Information 1992 9 275-303

- Smith M.J. and Wisten M.B., A continuous day-to-day traffic assignment model and the existence of a continuous dynamic user equilibrium , Annals of Operations Research, Volume 60, 1995

External links

This article incorporates material from asymptotically stable on PlanetMath, which is licensed under the Creative Commons Attribution/Share-Alike License.